Hi, in this post I bring forward a debate in the area of evaluating complex interventions. Traditional linear, outcome-orientated methods of evaluation rooted in positivistic assumptions, such as many standalone RCTs, provide high levels of rigour but fail to capture emergent outcomes, which are the “hallmark of complex programs” (Crane et al., 2019, p. 423). However, the popular opinion that RCTs are the ‘gold standard’ is quickly being redressed, especially for the evaluation of social programmes and even policy change. Traditional techniques have their merits and are a defensible choice, but not the ‘best’!

There is no universally applied definition of evaluation. Characterisations usually depend on the approach taken, and there are many different evaluation approaches available. Evaluation covers a broad remit, including relevance, accessibility, comprehensiveness, integration, effectiveness, impact, cost, efficiency, and sustainability. Trying to distinguish the ‘best’ evaluation approach is not straightforward and, I would argue, not worth pursuing.

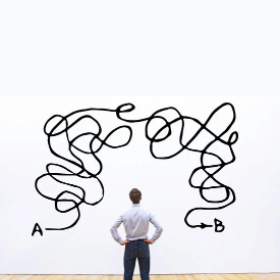

The plethora of approaches can leave you a little vexed. The traditionalist view is that Randomised Controlled Trials [RCTs] are the ‘gold standard’ because they’re rigorous, standardised, and generalisable, all of which is needed for evidence-based policymaking, right? That view may make you feel a little pushed into an area that doesn’t really align with the way you see the world or what’s needed to improve theory and practice.

The idea that RCTs are the ‘gold standard’ has been heavily debated over the past two decades, a debate that has helped move Medical Research Council [MRC] guidance on developing and evaluating complex interventions away from treating experimental methods as the best and only option. The most recent MRC guidance, now a framework, published in 2021 (Skivington et al., 2021), highlights the “need to maximise the efficiency, use, and impact of research” and the usefulness of alternative approaches that reflect recent practical and theoretical developments, for instance, programme theory, systems thinking, and natural experiments.

Two alternative arguments have developed, and I feel both can be reduced to a judgement about intervention complexity. The complexity lens applied to the intervention, or system, will naturally lead you into certain philosophical ways of thinking and doing.

There are two established meanings, which posit complexity as (1) belonging to the intervention and (2) belonging to the system. I found understanding how these lenses locate complexity an effective way to discern their differences and similarities, and how they measure up against the research problem, context, and, importantly, my value(s) base, as I attempt to use a pragmatic evaluation approach for a complex system (the Worcestershire Meeting Centres Support Programme).

Complex Intervention

For complex interventions, complexity is located in the components of the intervention, which require the use of two or more research methods. However, multicomponent interventions “may not necessarily be complex, but merely complicated” (Moore et al., 2019, p. 25). Pharmacological interventions tend to exemplify complicated or ‘simple’ interventions, which are definable and have few, well-known components, presenting fewer challenges for the researcher. In contrast, the MRC (Craig et al., 2013) defines complex interventions by:

- The number of groups and organisational levels targeted by the intervention

- The number and difficulty of behaviours required of those delivering or receiving the intervention

- The number and variety of the variables in the intervention

- The degree of flexibility permitted in the intervention

These attributes suggest multifaceted causal pathways, which many believe are better suited to pragmatic approaches that capture the highly desired mechanisms for change in complex programmes. However, this lens tends to be driven by the desire to standardise interventions across different settings to “consistently provide as close to the same intervention as possible” (MRC, 2000). Consequently, traditional techniques such as RCTs are a defensible choice, but not the ‘best’!

The UK MRC’s guidance for developing and evaluating complex interventions has been replaced by a new framework, commissioned by the MRC and the NIHR, which takes account of recent developments in theory and methods and the need to maximise the efficiency, use, and impact of research.

Complex System

Complex systems theory attributes complexity to emergence, feedback, adaptation, and self-organisation. In this context, interventions are viewed as “events within systems” that aim to disrupt systems functioning through shifting relationships and resources, and displacing deep-rooted practices.

Complex and emergent causal pathways present considerable challenges for determining the most appropriate outcomes and how best to capture and measure emergent changes. This is particularly problematic for economic evaluation, where future values, costs, and benefits are hard to monitor.

Traditional linear, outcome-orientated methods of evaluation rooted in positivistic assumptions provide high levels of rigour but fail to capture emergent outcomes, which are the “hallmark of complex programs” (Crane et al., 2019, p. 423). The new MRC framework focuses on going beyond proving that the intervention or system works (in other words, that it delivered its intended outcomes) to asking questions about how and under what conditions interventions cause intended and unintended change. Collective and deliberative methods of evaluation are needed.

‘Good enough’

Clearly there are practical and methodological challenges for evaluating complex interventions and systems, and depending on your worldview, there may also be a philosophical challenge to overcome if you’re working according to outdated standards.

You may be used to objective and unbiased answers to narrow questions and now need to be comfortable being broad and subjective, to provide ‘good enough’ evidence to improve. Research questions should be driven by what’s important to stakeholders at a local level in order to reduce inequalities, rather than questions that can be answered with higher levels of certainty. There is no objective truth in value, so why are we trying to find it? Value is subjective.

The opinion that RCTs are the ‘gold standard’ is quickly being redressed, especially for the evaluation of social programmes and even policy change. Just as the gold standard began to lose its popular status as the best way to run a monetary system in the 1930s, the field of evaluation is undergoing a similar shift, what I would describe as widening the goalposts, where ‘good enough’ becomes the new standard. This will allow greater scope for academics, professionals, and the public to answer new and emerging research questions – ‘critical questions’ – in order to improve and not just prove value.

Nathan

- Craig, P., Dieppe, P., Macintyre, S., Michie, S., Nazareth, I., & Petticrew, M. (2013). Developing and evaluating complex interventions: The new Medical Research Council guidance. International Journal of Nursing Studies, 50(5), 587–592. https://doi.org/10.1016/J.IJNURSTU.2012.09.010

- Crane, M., Bauman, A., Lloyd, B., McGill, B., Rissel, C., & Grunseit, A. (2019). Applying pragmatic approaches to complex program evaluation: A case study of implementation of the New South Wales Get Healthy at Work program. Health Promotion Journal of Australia, 30(3), 422–432. https://doi.org/10.1002/hpja.239

- Moore, G. F., Evans, R. E., Hawkins, J., Littlecott, H., Melendez-Torres, G. J., Bonell, C., & Murphy, S. (2019). From complex social interventions to interventions in complex social systems: Future directions and unresolved questions for intervention development and evaluation. Evaluation, 25(1), 23–45. https://doi.org/10.1177/1356389018803219

- Skivington, K., Matthews, L., Simpson, S. A., Craig, P., Baird, J., Blazeby, J. M., Boyd, K. A., Craig, N., French, D. P., McIntosh, E., Petticrew, M., Rycroft-Malone, J., White, M., & Moore, L. (2021). A new framework for developing and evaluating complex interventions: Update of Medical Research Council guidance. The BMJ, 374. https://doi.org/10.1136/BMJ.N2061

Nathan Stephens

Author

Nathan Stephens is a PhD student and unpaid carer, working on his PhD at the University of Worcester, studying the Worcestershire Meeting Centres Community Support Programme. Inspired by caring for both grandparents and personal experience of dementia, Nathan has gone from a BSc in Sports & Physical Education to an MSc in Public Health, and is now working on his PhD.
